ELK

Binary installation

Elasticsearch

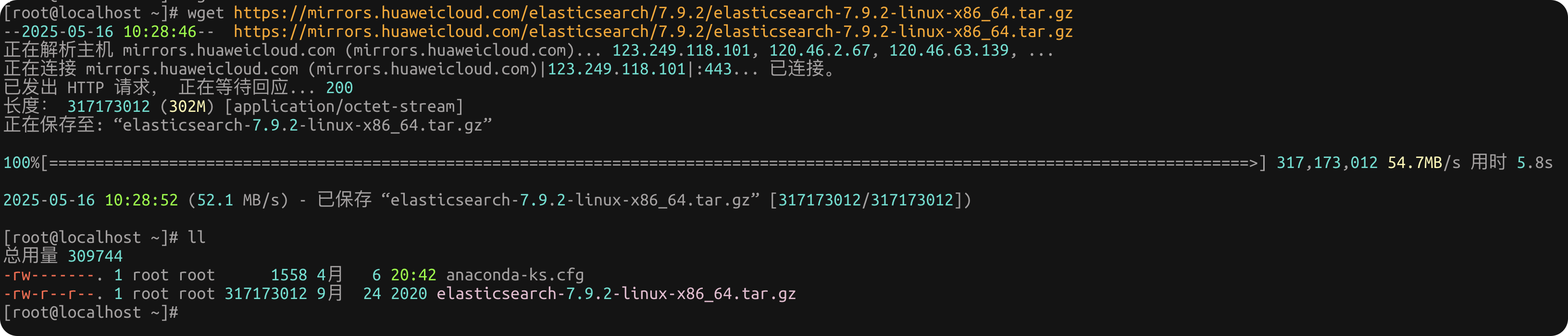

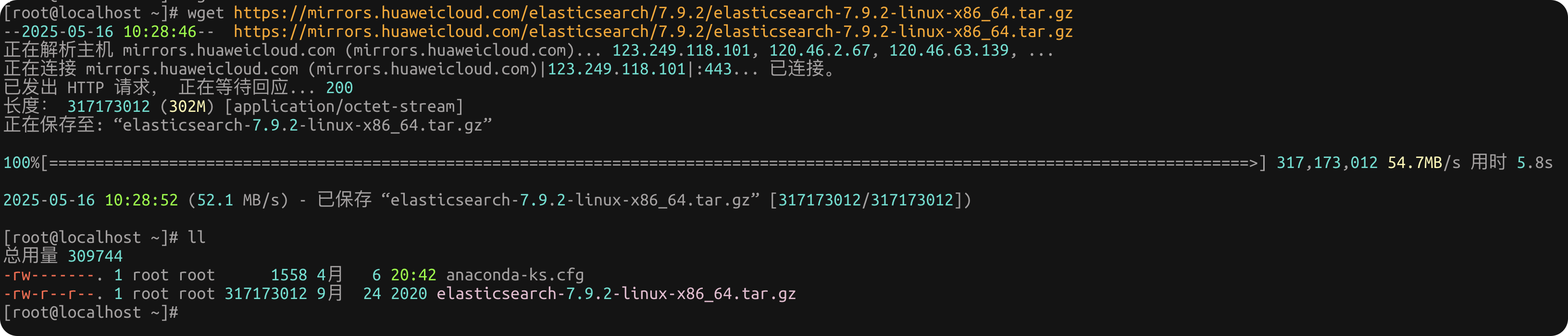

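Download ES

Official site: [https://www.elastic.co/cn/](https://www.elastic.co/cn/)

Download URL (Huawei Cloud mirror): [https://mirrors.huaweicloud.com/elasticsearch/7.9.2/elasticsearch-7.9.2-linux-x86_64.tar.gz](https://mirrors.huaweicloud.com/elasticsearch/7.9.2/elasticsearch-7.9.2-linux-x86_64.tar.gz)

Version compatibility matrix: [https://www.elastic.co/cn/support/matrix/#matrix_compatibility](https://www.elastic.co/cn/support/matrix/#matrix_compatibility)

Official downloads: [https://www.elastic.co/cn/downloads](https://www.elastic.co/cn/downloads)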

wget https://mirrors.huaweicloud.com/elasticsearch/7.9.2/elasticsearch-7.9.2-linux-x86_64.tar.gz
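Optionally, check the archive before extracting it. Elastic publishes a `.sha512` file next to each artifact on artifacts.elastic.co; whether the Huawei mirror carries the same file is an assumption, so this sketch takes the checksum file as a parameter. `verify_sha512` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Compare a file's SHA-512 digest with the first field of a checksum file
# ("<hex digest>  <file name>", the layout of Elastic's .sha512 files).
verify_sha512() {
  local archive="$1" sumfile="$2" expected actual
  expected=$(awk '{print $1; exit}' "$sumfile")          # digest from the checksum file
  actual=$(sha512sum "$archive" | awk '{print $1}')      # digest of the downloaded file
  if [ -n "$expected" ] && [ "$expected" = "$actual" ]; then
    echo "OK: $archive"
    return 0
  fi
  echo "checksum mismatch: $archive" >&2
  return 1
}
```

For example, download `https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.9.2-linux-x86_64.tar.gz.sha512`, then run `verify_sha512 elasticsearch-7.9.2-linux-x86_64.tar.gz elasticsearch-7.9.2-linux-x86_64.tar.gz.sha512`.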

Extract ES

# Check the downloaded archive

[root@localhost ~]# ll

total 309744

...

-rw-r--r--. 1 root root 317173012 Sep 24 2020 elasticsearch-7.9.2-linux-x86_64.tar.gz

# Extract into /usr/local

[root@localhost ~]# tar xf elasticsearch-7.9.2-linux-x86_64.tar.gz -C /usr/local/

After extracting, it is best to create a symlink; this makes later upgrades easier.

# Go to /usr/local/

[root@localhost ~]# cd /usr/local/

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 9 root root 155 Jan 25 2023 elasticsearch-7.9.2

...

# Create the symlink

[root@localhost local]# ln -s elasticsearch-7.9.2/ es

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 9 root root 155 Jan 25 2023 elasticsearch-7.9.2

lrwxrwxrwx. 1 root root 20 May 16 09:20 es -> elasticsearch-7.9.2/

...

Create the es user

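# Create a system user that cannot log in

useradd --system --no-create-home --shell /sbin/nologin es

Options:

--system: create a system account (UID below 1000, not meant for interactive login).

--no-create-home: do not create a home directory (Elasticsearch does not need one).

--shell /sbin/nologin: prevent the user from logging in to the system.

es: the user name

Change owner and group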

# Go to /usr/local/

[root@localhost local]# cd /usr/local/

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 9 root root 155 Sep 23 2020 elasticsearch-7.9.2

lrwxrwxrwx. 1 root root 20 May 16 10:31 es -> elasticsearch-7.9.2/

...

# Change the owner and group of the elasticsearch-7.9.2 directory

[root@localhost local]# chown -R es:es elasticsearch-7.9.2/

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 9 es es 155 Jan 25 2023 elasticsearch-7.9.2

lrwxrwxrwx. 1 root root 20 May 16 09:20 es -> elasticsearch-7.9.2/

...

Edit the configuration files

Go to the configuration directory

# Go to /usr/local/es/config/

[root@localhost local]# cd /usr/local/es/config/

[root@localhost config]# ll

total 36

-rw-rw----. 1 es es 2831 Sep 23 2020 elasticsearch.yml

-rw-rw----. 1 es es 2301 Sep 23 2020 jvm.options

drwxr-x---. 2 es es 6 Sep 23 2020 jvm.options.d

-rw-rw----. 1 es es 17671 Sep 23 2020 log4j2.properties

-rw-rw----. 1 es es 473 Sep 23 2020 role_mapping.yml

-rw-rw----. 1 es es 197 Sep 23 2020 roles.yml

-rw-rw----. 1 es es 0 Sep 23 2020 users

-rw-rw----. 1 es es 0 Sep 23 2020 users_roles

Edit elasticsearch.yml

vim elasticsearch.yml

node.name: node

path.data: /data/esdata

path.logs: /var/log/elasticsearch

bootstrap.memory_lock: true

network.host: 0.0.0.0

http.port: 9200

discovery.type: single-node

action.destructive_requires_name: true

Annotated configuration file

# ======================== Elasticsearch Configuration =========================

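# Elasticsearch configuration file

# NOTE: Elasticsearch comes with reasonable defaults for most settings.

# Before you tweak and tune the configuration, make sure you understand what you are trying to accomplish and the consequences.

#

# The primary way of configuring a node is via this file. This template lists the most important settings you may want to configure for a production cluster.

#

# Please consult the documentation for further information on configuration options:

# https://www.elastic.co/guide/en/elasticsearch/reference/index.html

#

# ---------------------------------- Cluster -----------------------------------

# Cluster settings

#

# Use a descriptive name for your cluster:

# The name identifies the cluster; nodes with the same cluster name try to join the same cluster

# cluster.name: ELK

#

# ------------------------------------ Node ------------------------------------

# Node settings

#

# Use a descriptive name for the node:

# Each node should have a unique name that identifies it in the cluster

node.name: node

#

# Add custom attributes to the node:

# Useful for grouping nodes or marking nodes for specific purposes

#node.attr.rack: r1

#

# ----------------------------------- Paths ------------------------------------

# Path settings

#

# Path to the directory where data is stored (separate multiple locations by commas):

# Where Elasticsearch stores index data

path.data: /data/esdata

#

# Path to log files:

# Where Elasticsearch writes its log files

path.logs: /var/log/elasticsearch

#

# ----------------------------------- Memory -----------------------------------

# Memory settings

#

# Lock the memory on startup:

# Keeps Elasticsearch memory from being swapped to disk, which improves performance

bootstrap.memory_lock: true

#

# Make sure that the heap size is set to about half the memory available on the system and that the owner of the process is allowed to use this limit.

# Elasticsearch performs poorly when the system is swapping memory.

#

# ---------------------------------- Network -----------------------------------

# Network settings

#

# Set the bind address to a specific IP (IPv4 or IPv6):

# 0.0.0.0 binds to all available network interfaces

network.host: 0.0.0.0

#

# Set a custom port for HTTP:

# The default is 9200, the Elasticsearch REST API port

http.port: 9200

#

# For more information, consult the network module documentation.

#

# --------------------------------- Discovery ----------------------------------

# Discovery settings

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

# List the IP addresses of the other cluster nodes here

# discovery.seed_hosts: ["192.168.149.125","192.168.149.126","192.168.149.127"]

#

# Bootstrap the cluster using an initial set of master-eligible nodes:

# Lists the master-eligible nodes used when the cluster is formed for the first time

# cluster.initial_master_nodes: ["localhost"]

#

# If you are sure this is a single-node environment, you can set this instead to skip master election

discovery.type: single-node

#

# For more information, consult the discovery and cluster formation module documentation.

#

# ---------------------------------- Gateway -----------------------------------

# Gateway settings

#

# Block initial recovery after a full cluster restart until N nodes are started:

# Data recovery starts only once the specified number of nodes is available, which improves data safety

# gateway.recover_after_nodes: 2

#

# For more information, consult the gateway module documentation.

#

# ---------------------------------- Various -----------------------------------

# Miscellaneous settings

#

# Require explicit names when deleting indices:

# Prevents deleting multiple indices by accident (for example with wildcards), for safer operations

action.destructive_requires_name: true

Edit jvm.options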
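The heap value (4g in the file below) should fit the machine. As a rough sketch of the rule from the Memory comments above (about half of the RAM, with -Xms equal to -Xmx), this helper derives a value from MemTotal. The 31 GB cap is an assumption based on Elastic's advice to keep the heap below the compressed-oops threshold (around 32 GB); `suggest_heap_mb` and `heap_lines` are hypothetical names:

```shell
#!/usr/bin/env bash
# Suggest a JVM heap size in MB from total RAM in kB (as in /proc/meminfo):
# half of the RAM, capped at 31 GB (assumed compressed-oops safe limit).
suggest_heap_mb() {
  local mem_kb="$1"
  local half_mb=$(( mem_kb / 1024 / 2 ))
  local cap_mb=31744
  if [ "$half_mb" -gt "$cap_mb" ]; then
    half_mb=$cap_mb
  fi
  echo "$half_mb"
}

# Print matching -Xms/-Xmx lines for jvm.options (min and max set to the same value).
heap_lines() {
  local mb
  mb=$(suggest_heap_mb "$1")
  printf -- '-Xms%sm\n-Xmx%sm\n' "$mb" "$mb"
}
```

Run it as `heap_lines "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"`; for a MemTotal of 8388608 kB it prints `-Xms4096m` and `-Xmx4096m`.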

vim jvm.options

## JVM configuration

################################################################

## IMPORTANT: JVM heap size

################################################################

##

## You should always set the min and max JVM heap

## size to the same value. For example, to set

## the heap to 4 GB, set:

##

## -Xms4g

## -Xmx4g

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/current/heap-size.html

## for more information

##

################################################################

# Xms represents the initial size of total heap space

# Xmx represents the maximum size of total heap space

-Xms4g

-Xmx4g

Create the data and log directories

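# Create the data directory

mkdir -p /data/esdata/

# Create the log directory

mkdir /var/log/elasticsearch

Change owner and group (the es user must be able to write to both directories)

chown -R es:es /data/esdata/

chown -R es:es /var/log/elasticsearch

Start in the foreground first to check for errors

sudo -u es /usr/local/es/bin/elasticsearch

Start in the background

sudo -u es /usr/local/es/bin/elasticsearch -d

Verify the service by checking the listening ports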
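With `-d` the command returns right away while Elasticsearch may still be starting, so the port check can fail at first. A small polling loop helps; this is a sketch, and `wait_for_port` is a hypothetical helper built on bash's `/dev/tcp` feature:

```shell
#!/usr/bin/env bash
# Wait until host:port accepts TCP connections, trying once per second.
# Returns 0 as soon as a connection succeeds, 1 after the given number of tries.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i
  for (( i = 1; i <= tries; i++ )); do
    # Opening /dev/tcp/<host>/<port> succeeds only if something is listening;
    # the subshell closes the connection again immediately.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    if (( i < tries )); then
      sleep 1
    fi
  done
  return 1
}
```

For example: `wait_for_port 127.0.0.1 9200 60 && curl localhost:9200`.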

[root@localhost logs]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

...

LISTEN 0 128 [::]:9200 [::]:*

LISTEN 0 128 [::]:9300 [::]:*

...

Verify the service via its HTTP endpoint

[root@localhost logs]# curl localhost:9200

{

"name" : "node", # node name

"cluster_name" : "elasticsearch", # cluster name (the default, since cluster.name is not set)

"cluster_uuid" : "Kc7g6qybRNOi5yXVAEp04g", # cluster UUID

"version" : {

"number" : "7.9.2", # ES version

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "d34da0ea4a966c4e49417f2da2f244e3e97b4e6e",

"build_date" : "2020-09-23T00:45:33.626720Z",

"build_snapshot" : false,

"lucene_version" : "8.6.2", # Lucene version; ES is built on Lucene for search

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search" # the project's tagline

}

Kibana

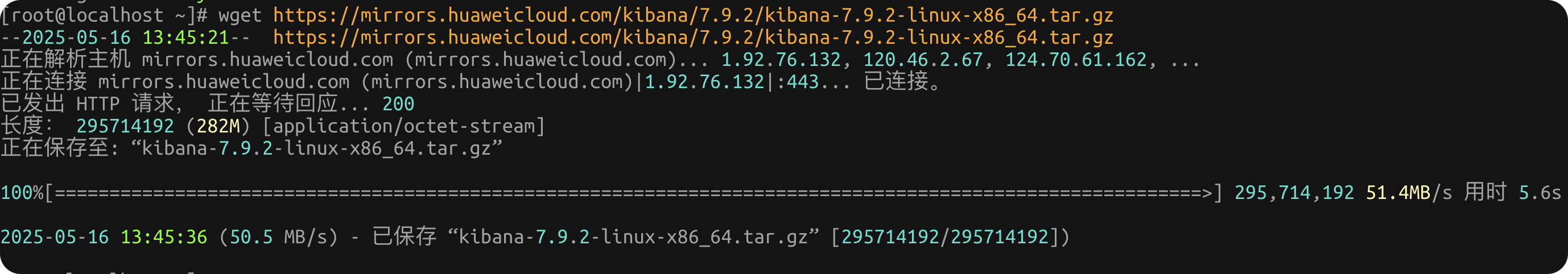

Download Kibana

Download URL (Huawei Cloud mirror): https://mirrors.huaweicloud.com/kibana/7.9.2/kibana-7.9.2-linux-x86_64.tar.gz

Version compatibility matrix: https://www.elastic.co/cn/support/matrix/#matrix_compatibility

Official downloads: https://www.elastic.co/downloads/kibana

wget https://mirrors.huaweicloud.com/kibana/7.9.2/kibana-7.9.2-linux-x86_64.tar.gz

Extract Kibana

# Check the downloaded archive

[root@localhost ~]# ll

total 288788

...

-rw-r--r--. 1 root root 295714192 Sep 24 2020 kibana-7.9.2-linux-x86_64.tar.gz

# Extract into /usr/local

[root@localhost ~]# tar xf kibana-7.9.2-linux-x86_64.tar.gz -C /usr/local/

After extracting, it is best to create a symlink; this makes later upgrades easier.

# Go to /usr/local/

[root@localhost ~]# cd /usr/local

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 13 root root 266 May 16 13:48 kibana-7.9.2-linux-x86_64

...

# Create the symlink

[root@localhost local]# ln -s kibana-7.9.2-linux-x86_64/ kibana

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 13 root root 266 May 16 13:48 kibana-7.9.2-linux-x86_64

lrwxrwxrwx. 1 root root 26 May 16 13:49 kibana -> kibana-7.9.2-linux-x86_64/

...

Edit the configuration files

Go to the configuration directory

# Go to /usr/local/kibana/config/

[root@localhost ~]# cd /usr/local/kibana/config/

[root@localhost config]# ll

total 12

-rw-r--r--. 1 root root 5259 Sep 23 2020 kibana.yml

-rw-r--r--. 1 root root 216 Sep 23 2020 node.options

Edit kibana.yml
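The four settings below can be edited by hand in vim. To script the change instead, a sketch like this one sets a key if the file already has a line for it (commented out or not) and appends it otherwise. `set_yml_key` is a hypothetical helper; it relies on GNU sed (`-i`, `0,/re/`), and values must not contain `|`, `&` or backslashes:

```shell
#!/usr/bin/env bash
# Set "key: value" in a flat YAML file such as kibana.yml.
# The first existing line for the key (commented out or not) is replaced;
# if there is none, the setting is appended. Requires GNU sed.
set_yml_key() {
  local file="$1" key="$2" value="$3"
  local re_key pattern
  re_key=$(printf '%s' "$key" | sed 's/\./\\./g')   # escape dots for the regex
  pattern="^#?[[:space:]]*${re_key}:.*"
  if grep -Eq "$pattern" "$file"; then
    # 0,/re/ limits the substitution to the first matching line
    sed -E -i "0,/${pattern}/s|${pattern}|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, in /usr/local/kibana/config: `set_yml_key kibana.yml server.host '"0.0.0.0"'` and `set_yml_key kibana.yml elasticsearch.hosts '["http://192.168.56.112:9200"]'`.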

server.port: 5601 # listening port

server.host: "0.0.0.0" # listening address

elasticsearch.hosts: ["http://192.168.56.112:9200"]

i18n.locale: "zh-CN" # Chinese UI

Start in the foreground first to check for errors

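# Kibana refuses to run as root unless --allow-root is given

[root@localhost bin]# ./kibana

Kibana should not be run as root. Use --allow-root to continue.

# Start as root

[root@localhost bin]# ./kibana --allow-root

Startup then logs the following errors. They are not fatal; they come from the Reporting plugin's self-check.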

{ Error: socket hang up

at createHangUpError (_http_client.js:332:15)

at Socket.socketOnEnd (_http_client.js:435:23)

at Socket.emit (events.js:203:15)

at endReadableNT (_stream_readable.js:1145:12)

at process._tickCallback (internal/process/next_tick.js:63:19) code: 'ECONNRESET' } }

log [06:03:57.700] [error][plugins][reporting][validations] Error: Could not close browser client handle!

at browserFactory.test.then.browser (/usr/local/kibana-7.9.2-linux-x86_64/x-pack/plugins/reporting/server/lib/validate/validate_browser.js:26:15)

at process._tickCallback (internal/process/next_tick.js:68:7)

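log [06:03:57.715] [warning][plugins][reporting][validations] Reporting plugin self-check generated a warning: Error: Could not close browser client handle!

To fix it, install the libraries that the Reporting plugin's headless browser needs: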

sudo yum install -y \

fontconfig \

freetype \

libjpeg \

libpng \

cups-libs \

libXcomposite \

libXcursor \

libXdamage \

libXext \

libXi \

libXtst \

pango \

alsa-lib \

xorg-x11-fonts-Type1 \

xorg-x11-fonts-75dpi \

xorg-x11-utils \

xorg-x11-fonts-misc

Run Kibana as a systemd service

vim /etc/systemd/system/kibana.service

[Unit]

Description=Kibana

After=network.target

[Service]

Type=simple

User=root

Group=root

ExecStart=/usr/local/kibana/bin/kibana --allow-root

Restart=always

RestartSec=10

WorkingDirectory=/usr/local/kibana

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

Reload systemd

systemctl daemon-reload

Start the service

systemctl start kibana

Enable start at boot

systemctl enable kibana

Verify the service

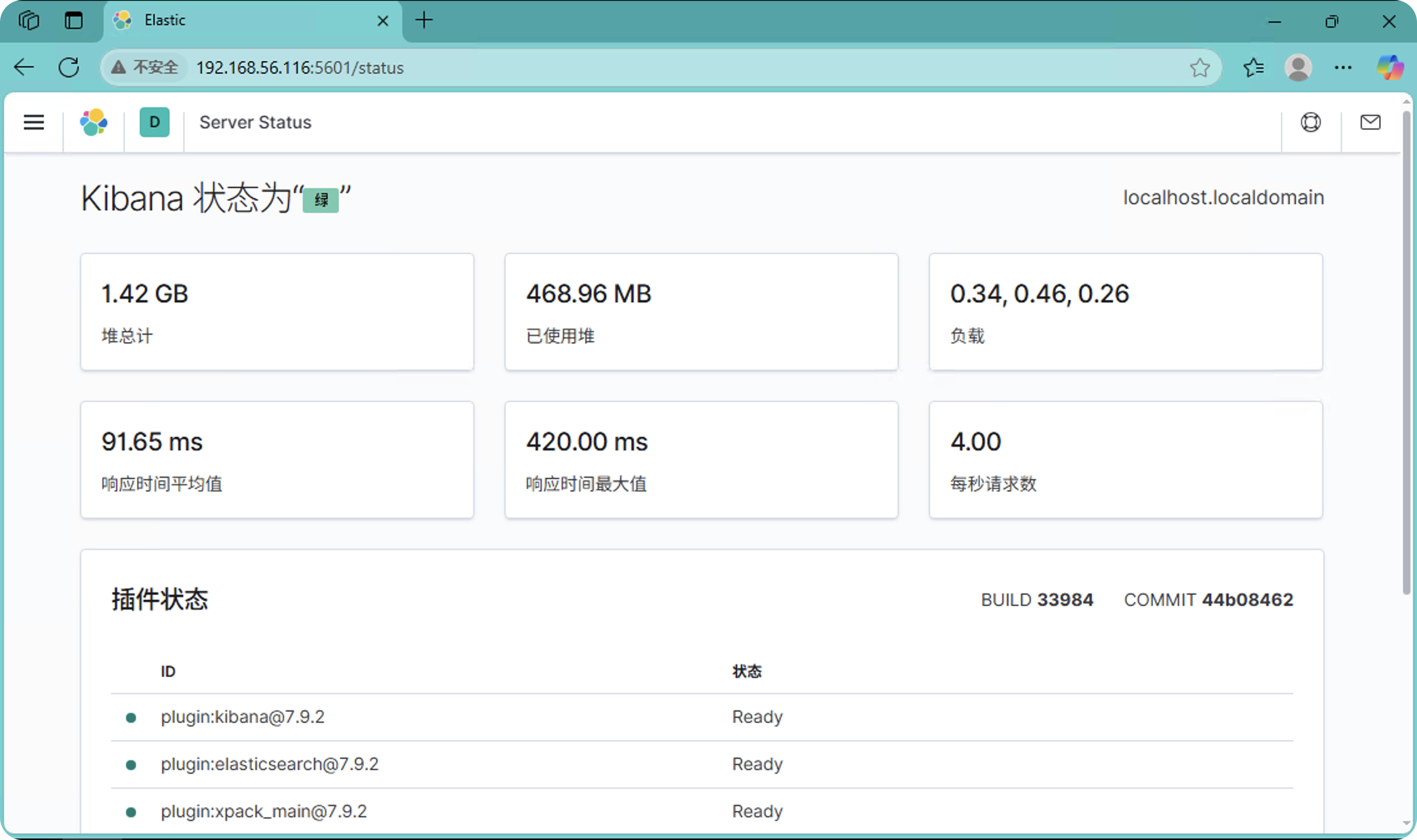

Check the listening ports

[root@localhost kibana]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:5601 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

Open the status page in a browser

http://192.168.56.116:5601/status

Logstash

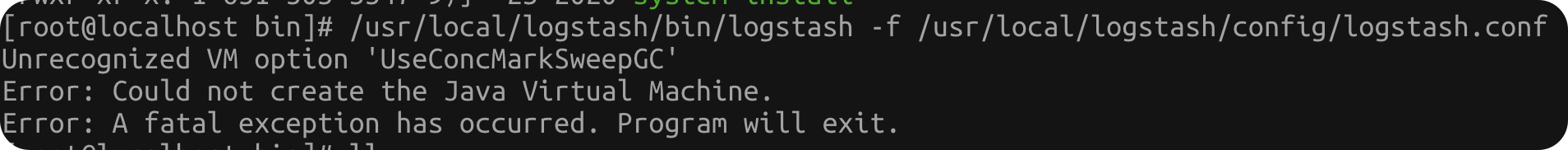

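JDK

Upload JDK 8. Avoid newer versions; they may not be compatible.

The reason is UseConcMarkSweepGC (set in Logstash's jvm.options), a garbage collector option for older Java: the CMS collector was deprecated in Java 9 and removed in Java 14, so newer JVMs no longer support it.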
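To check which Java a shell will actually use before starting Logstash, the first line of `java -version` (printed on stderr) can be parsed. A minimal sketch with hypothetical helper names; it handles both the old `1.8.0_161` scheme and the newer `15` / `11.0.2` scheme:

```shell
#!/usr/bin/env bash
# Extract the Java major version from the first line of `java -version`,
# e.g. 'java version "1.8.0_161"' -> 8, 'openjdk version "15" 2020-09-15' -> 15.
java_major() {
  local line="$1" ver
  ver=$(printf '%s\n' "$line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$ver" in
    1.*) ver=${ver#1.} ;;    # legacy "1.x" numbering used up to Java 8
  esac
  echo "${ver%%[._-]*}"      # keep only the leading number
}

# Succeeds if the JVM still has the CMS collector (removed in Java 14).
supports_cms() {
  [ "$(java_major "$1")" -lt 14 ]
}
```

Usage: `java_major "$(java -version 2>&1 | head -n 1)"` prints 8 for JDK 8u161; `supports_cms` fails for a Java 15 version line.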

Extract

tar xf jdk-8u161-linux-x64.tar.gz -C /usr/local

Rename the directory

mv /usr/local/jdk1.8.0_161 /usr/local/jdk

Change owner and group

chown -R root:root /usr/local/jdk/

Configure the environment variables

vim /etc/profile

# Append the following at the end

export JAVA_HOME=/usr/local/jdk

export JRE_HOME=/usr/local/jdk/jre

export PATH=$JAVA_HOME/bin:$PATH

Reload the variables

source /etc/profile

Verify

java -version

[root@localhost jdk]# java -version

openjdk version "15" 2020-09-15

OpenJDK Runtime Environment AdoptOpenJDK (build 15+36)

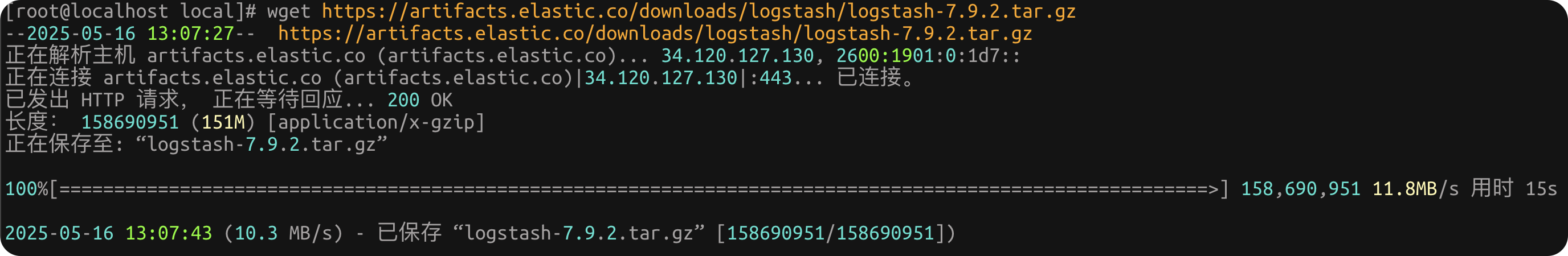

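OpenJDK 64-Bit Server VM AdoptOpenJDK (build 15+36, mixed mode, sharing)

Note: this sample output reports OpenJDK 15, which does not match the JDK 8 installed above. With the setup above, `java -version` should report version 1.8.0_161.

Download Logstash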

Download URL: https://artifacts.elastic.co/downloads/logstash/logstash-7.9.2.tar.gz

Version compatibility matrix: https://www.elastic.co/cn/support/matrix/#matrix_compatibility

Official downloads: https://www.elastic.co/cn/downloads

# Download

[root@localhost ~]# wget https://artifacts.elastic.co/downloads/logstash/logstash-7.9.2.tar.gz

Extract Logstash

# Check the downloaded archive

[root@localhost ~]# ll

total 154976

-rw-------. 1 root root 1558 Apr 6 20:42 anaconda-ks.cfg

-rw-r--r--. 1 root root 158690951 Sep 24 2020 logstash-7.9.2.tar.gz

# Extract into /usr/local

[root@localhost ~]# tar xf logstash-7.9.2.tar.gz -C /usr/local/

After extracting, it is best to create a symlink; this makes later upgrades easier.

# Go to /usr/local/

[root@localhost ~]# cd /usr/local/

[root@localhost local]# ll

total 0

...

drwxr-xr-x. 12 631 503 255 Sep 23 2020 logstash-7.9.2

...

# Change the owner to root (the extracted files belong to UID 631 / GID 503)

[root@localhost local]# chown -R root:root logstash-7.9.2/

# Create the symlink

[root@localhost local]# ln -s logstash-7.9.2/ logstash

[root@localhost local]# ll

total 0

...

lrwxrwxrwx. 1 root root 15 May 16 11:28 logstash -> logstash-7.9.2/

drwxr-xr-x. 12 631 503 255 Sep 23 2020 logstash-7.9.2

...

Directory layout

/usr/local/logstash

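├── bin/ # startup scripts

├── config/ # default configuration directory

├── data/ # data cache

├── logstash.conf # a good place for your pipeline file (or keep it elsewhere)

├── logs/ # log output

├── modules/ # modules

└── vendor/ # plugin dependencies

Edit the configuration files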

Go to the configuration directory

# Go to /usr/local/logstash/config/

[root@localhost ~]# cd /usr/local/logstash/config/

[root@localhost config]# ll

total 40

-rw-r--r--. 1 631 503 2019 Sep 23 2020 jvm.options

-rw-r--r--. 1 631 503 9097 Sep 23 2020 log4j2.properties

-rw-r--r--. 1 631 503 342 Sep 23 2020 logstash-sample.conf # template

-rw-r--r--. 1 631 503 10661 Sep 23 2020 logstash.yml

-rw-r--r--. 1 631 503 3146 Sep 23 2020 pipelines.yml

-rw-r--r--. 1 631 503 1696 Sep 23 2020 startup.options

Create the logstash.conf pipeline file

Logstash needs a pipeline configuration file with input and output sections to start:

vim logstash.conf

# Example 1: write the events to a local file

input {

file {

path => "/var/log/messages"

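start_position => "beginning" # read from the start of the file (good for testing); the default is "end" (only new lines)

sincedb_path => "/dev/null" # do not remember the read position, so every start re-reads from the beginning; for testing only, remove it in production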

}

}

output {

file {

path => "/root/messagelogs.%{+yyyy.MM.dd}"

codec => line { format => "%{message}" }

}

}

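# Example 2: send the events to Elasticsearch instead. Keep only one of the two examples in logstash.conf: if both are present, Logstash merges them into one pipeline and every event goes to both outputs.

input {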

file {

path => "/var/log/messages"

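start_position => "beginning" # read from the start of the file (good for testing); the default is "end" (only new lines)

sincedb_path => "/dev/null" # do not remember the read position, so every start re-reads from the beginning; for testing only, remove it in production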

}

}

output {

elasticsearch { # output to Elasticsearch

hosts => ["http://192.168.56.112:9200"] # Elasticsearch address

index => "systemlog-%{+YYYY.MM.dd}" # index name

}

}

Start in the foreground first to check for errors

/usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf

Run Logstash as a systemd service

vim /etc/systemd/system/logstash.service

[Unit]

Description=Logstash

After=network.target

[Service]

Type=simple

User=root

Group=root

ExecStart=/usr/local/logstash/bin/logstash -f /usr/local/logstash/config/logstash.conf --path.settings /usr/local/logstash/config

Restart=always

LimitNOFILE=65536

Environment="JAVA_HOME=/usr/local/jdk"

[Install]

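WantedBy=multi-user.target

Reload systemd

systemctl daemon-reload

Start the service

systemctl start logstash

Enable start at boot

systemctl enable logstash

Verify the service

Check the listening ports (9600 is the Logstash monitoring API)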

[root@localhost jdk]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 50 [::ffff:127.0.0.1]:9600 [::]:*

On the Elasticsearch server, verify that data has arrived
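The date part of `%{+YYYY.MM.dd}` comes from the event's @timestamp, which Logstash keeps in UTC, so on a UTC+8 server the new day's index appears at 08:00 local time. A sketch that computes the expected index name the same way (`daily_index` is a hypothetical helper; it needs GNU date for `-d @epoch`):

```shell
#!/usr/bin/env bash
# Build the index name a pattern like "systemlog-%{+YYYY.MM.dd}" produces
# for a given Unix time (default: now). The date is taken in UTC.
daily_index() {
  local prefix="$1" epoch="${2:-$(date +%s)}"
  echo "${prefix}-$(date -u -d "@${epoch}" +%Y.%m.%d)"
}
```

For the pipeline above, `curl -s "http://localhost:9200/_cat/indices/$(daily_index systemlog)?v"` shows today's index.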

[root@localhost ~]# cd /data/esdata/nodes/0/indices/

[root@localhost indices]# ll

总用量 0

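drwxr-xr-x. 4 es es 29 May 16 13:19 mTosiycDQ7iPfndDuBXrug

# The index UUID matches the directory name

[root@localhost indices]# curl -X GET http://localhost:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

yellow open system-logs-2025.05.16 mTosiycDQ7iPfndDuBXrug 1 1 9783 0 1.6mb 1.6mb

The index name in this sample (system-logs-...) differs from the systemlog-%{+YYYY.MM.dd} pattern configured above; the actual name follows the index setting in your pipeline. The yellow status is expected on a single node: the replica shard (rep 1) has no second node to be assigned to.

Kubernetes installation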

This setup uses NFS for storage; adjust it if you use a different storage backend.
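The PVs below expect /home/elk00, /home/elk01 and /home/elk02 to be exported by the NFS server 192.168.56.42. A sketch that prints matching /etc/exports lines; the client range 192.168.56.0/24 is an assumption, and no_root_squash is assumed to be needed because the Elasticsearch init container runs `chown` as root on the volume (with the default root_squash, that chown would be denied). `render_exports` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print one /etc/exports line per directory for the given client range.
# Options are assumptions: read-write, synchronous writes, root not squashed.
render_exports() {
  local clients="$1"; shift
  local dir
  for dir in "$@"; do
    printf '%s %s(rw,sync,no_root_squash)\n' "$dir" "$clients"
  done
}
```

On 192.168.56.42: `mkdir -p /home/elk00 /home/elk01 /home/elk02`, then `render_exports 192.168.56.0/24 /home/elk00 /home/elk01 /home/elk02 >> /etc/exports` and `exportfs -ra`. The Kubernetes nodes also need the NFS client (nfs-utils on CentOS).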

Create directories for the manifests

mkdir -p /home/elk/{01.es,02.kibana,03.logstash}

Create the namespace

vim /home/elk/00.elk_namespace.yaml

kind: Namespace

apiVersion: v1

metadata:

name: logging

labels:

environment: dev

version: v1

component: namespace

ES

Create the PVs

vim /home/elk/01.es/00.elasticsearch-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: data-elasticsearch-0

labels:

app: elasticsearch

environment: dev

version: v1

component: pv

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

storageClassName: efk-sc

nfs:

path: "/home/elk00"

server: 192.168.56.42

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: data-elasticsearch-1

labels:

app: elasticsearch

environment: dev

version: v1

component: pv

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

storageClassName: efk-sc

nfs:

path: "/home/elk01"

server: 192.168.56.42

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: data-elasticsearch-2

labels:

app: elasticsearch

environment: dev

version: v1

component: pv

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain

storageClassName: efk-sc

nfs:

path: "/home/elk02"

server: 192.168.56.42

Create the Services

vim /home/elk/01.es/01.elasticsearch-svc.yaml

kind: Service

apiVersion: v1

metadata:

name: elasticsearch

namespace: logging

labels:

app: elasticsearch

environment: dev

version: v1

component: svc

spec:

selector:

app: elasticsearch

clusterIP: None

ports:

- port: 9200

name: rest

- port: 9300

name: inter-node

---

kind: Service

apiVersion: v1

metadata:

name: elasticsearch-nodepod

namespace: logging

labels:

app: elasticsearch

environment: dev

version: v1

component: svc

spec:

type: NodePort

selector:

app: elasticsearch

ports:

- name: es

port: 9200

targetPort: 9200

nodePort: 30920

Create the StatefulSet

vim /home/elk/01.es/02.elasticsearch-statefulset.yaml

kind: StatefulSet

apiVersion: apps/v1

metadata:

name: elasticsearch

namespace: logging

spec:

serviceName: elasticsearch

replicas: 3

selector:

matchLabels: # must match the Pod template labels below, otherwise the StatefulSet is rejected

app: elasticsearch

template:

metadata:

labels:

app: elasticsearch

spec:

initContainers:

- name: increase-vm-max-map

image: busybox

imagePullPolicy: "IfNotPresent"

command: ["sysctl", "-w", "vm.max_map_count=262144"]

securityContext:

privileged: true

- name: increase-fd-ulimit

image: busybox

imagePullPolicy: "IfNotPresent"

securityContext:

privileged: true

command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]

volumeMounts:

- name: data

mountPath: /usr/share/elasticsearch/data

- name: install-ik

image: elasticsearch:7.17.3

command:

- sh

- -c

- |

elasticsearch-plugin install --batch https://get.infini.cloud/elasticsearch/analysis-ik/7.17.3

volumeMounts:

- name: plugins

mountPath: /usr/share/elasticsearch/plugins

containers:

- name: elasticsearch

image: zzwen.cn:8002/bosai/elasticsearch:7.17.3

imagePullPolicy: "IfNotPresent"

ports:

- name: rest

containerPort: 9200

- name: inter

containerPort: 9300

resources:

limits:

cpu: 1000m

requests:

cpu: 1000m

volumeMounts:

- mountPath: /usr/share/elasticsearch/data

name: data

- name: plugins

mountPath: /usr/share/elasticsearch/plugins

env:

- name: cluster.name

value: k8s-logs

- name: node.name

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: cluster.initial_master_nodes

value: "elasticsearch-0,elasticsearch-1,elasticsearch-2"

- name: discovery.zen.minimum_master_nodes # ignored by Elasticsearch 7.x, which manages the master voting quorum itself

value: "2"

- name: discovery.seed_hosts

value: "elasticsearch"

- name: ES_JAVA_OPTS

value: "-Xms512m -Xmx512m"

- name: network.host

value: "0.0.0.0"

volumes:

- name: plugins

emptyDir: {}

volumeClaimTemplates:

- metadata:

name: data

labels:

app: elasticsearch

environment: dev

version: v1

component: statefulset

spec:

accessModes:

- ReadWriteOnce

storageClassName: efk-sc

resources:

requests:

storage: 50Gi

Kibana

Create the ConfigMap

vim /home/elk/02.kibana/00.kibana-configmap.yaml

kind: ConfigMap

apiVersion: v1

metadata:

name: kibana-config

namespace: logging

labels:

app: kibana

environment: dev

version: v1

component: configmap

data:

kibana.yml: |

server.name: kibana

server.host: "0.0.0.0"

i18n.locale: zh-CN # default UI language: Chinese

elasticsearch:

hosts: ${ELASTICSEARCH_HOSTS} # ES cluster address, taken from the environment variable

Create the Service

vim /home/elk/02.kibana/01.kibana-service.yaml

kind: Service

apiVersion: v1

metadata:

name: kibana

namespace: logging

labels:

app: kibana

environment: dev

version: v1

component: service

spec:

ports:

- port: 5601

type: NodePort

selector:

app: kibana

Create the Deployment

vim /home/elk/02.kibana/02.kibana-deployment.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: kibana

namespace: logging

labels:

app: kibana

spec:

selector:

matchLabels:

app: kibana

template:

metadata:

labels:

app: kibana

spec:

nodeSelector:

app: logging

containers:

- name: kibana

image: zzwen.cn:8002/bosai/kibana:7.17.3

imagePullPolicy: "IfNotPresent"

resources:

limits:

cpu: 1000m

memory: 3Gi

requests:

cpu: 1000m

memory: 3Gi

env:

- name: ELASTICSEARCH_URL

value: http://elasticsearch.logging.svc.cluster.local:9200 # the ES headless Service (ELASTICSEARCH_URL is the Kibana 6.x variable; 7.x reads ELASTICSEARCH_HOSTS)

- name: ELASTICSEARCH_HOSTS

value: http://elasticsearch.logging.svc.cluster.local:9200 # the ES headless Service

ports:

- containerPort: 5601

name: kibana

volumeMounts:

- name: config

mountPath: /usr/share/kibana/config/kibana.yml # where the Kibana config file is mounted

readOnly: true

subPath: kibana.yml

volumes:

- name: config # must match the volumeMounts name above

configMap:

name: kibana-config # name of the ConfigMap

Logstash

Create the Service

vim /home/elk/03.logstash/00.logstash-service.yaml

apiVersion: v1

kind: Service

metadata:

name: logstash

namespace: logging

labels:

app: logstash

component: logstash

environment: dev

version: v1

spec:

ports:

- port: 5044

targetPort: 5044

nodePort: 30004

name: logstash

selector:

app: logstash

type: NodePort

Create the ConfigMap

vim /home/elk/03.logstash/01.logstash-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: logstash-pipeline

namespace: logging # same namespace as the Deployment that mounts it

data:

logstash.conf: |

input {

beats {

port => 5044

}

}

output {

elasticsearch {

hosts => ["http://elasticsearch:9200"]

index => "logstash-%{+YYYY.MM.dd}"

}

}

Create the Deployment

vim /home/elk/03.logstash/02.logstash-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: logstash

namespace: logging

labels:

app: logstash

component: logstash

environment: dev

version: v1

spec:

replicas: 1

selector:

matchLabels:

app: logstash

template:

metadata:

labels:

app: logstash

spec:

containers:

- name: logstash

image: zzwen.cn:8002/bosai/logstash:7.17.3

imagePullPolicy: "IfNotPresent"

ports:

- containerPort: 5044

volumeMounts:

- name: logstash-pipeline

mountPath: /usr/share/logstash/pipeline

resources:

limits:

cpu: 200m

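memory: 200Mi # likely too small: Logstash's default JVM heap is 1 GB, so the container may be OOM-killed; raise the limit or lower the heap via LS_JAVA_OPTS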

requests:

cpu: 200m

memory: 200Mi

volumes:

- name: logstash-pipeline

configMap:

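name: logstash-pipeline

Deploying an ES cluster from binaries

Download ES

Download URL (Huawei Cloud mirror): https://mirrors.huaweicloud.com/elasticsearch/7.9.2/elasticsearch-7.9.2-linux-x86_64.tar.gz

Version compatibility matrix: https://www.elastic.co/cn/support/matrix/#matrix_compatibility

Official downloads: https://www.elastic.co/cn/downloads

# Download

[root@localhost ~]# wget https://mirrors.huaweicloud.com/elasticsearch/7.9.2/elasticsearch-7.9.2-linux-x86_64.tar.gz

Extract ES

# Extract into /usr/local

[root@localhost ~]# tar xf elasticsearch-7.9.2-linux-x86_64.tar.gz -C /usr/local/

After extracting, it is best to create a symlink; this makes later upgrades easier.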

# Go to /usr/local/

[root@localhost ~]# cd /usr/local/

[root@localhost local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 9 root root 155 Jan 25 2023 elasticsearch-7.9.2

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 6 20:34 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

# Create the symlink

[root@localhost local]# ln -s elasticsearch-7.9.2/ es

[root@localhost local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 9 root root 155 Jan 25 2023 elasticsearch-7.9.2

lrwxrwxrwx. 1 root root 20 May 16 09:20 es -> elasticsearch-7.9.2/

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 6 20:34 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

Create the es user

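# Create a system user that cannot log in

useradd --system --no-create-home --shell /sbin/nologin es

Options:

--system: create a system account (UID below 1000, not meant for interactive login).

--no-create-home: do not create a home directory (Elasticsearch does not need one).

--shell /sbin/nologin: prevent the user from logging in to the system.

es: the user name

Change owner and group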

# Go to /usr/local/

[root@localhost local]# cd /usr/local/

[root@localhost local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 9 root root 155 Sep 23 2020 elasticsearch-7.9.2

lrwxrwxrwx. 1 root root 20 May 16 10:31 es -> elasticsearch-7.9.2/

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 6 20:34 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

# Change the owner and group of the elasticsearch-7.9.2 directory

[root@localhost local]# chown -R es:es elasticsearch-7.9.2/

[root@localhost local]# ll

total 0

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 9 es es 155 Jan 25 2023 elasticsearch-7.9.2

lrwxrwxrwx. 1 root root 20 May 16 09:20 es -> elasticsearch-7.9.2/

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 49 Apr 6 20:34 share

drwxr-xr-x. 2 root root 6 Apr 11 2018 src

Edit the configuration files

Go to the configuration directory

# Go to /usr/local/es/config/

[root@localhost local]# cd /usr/local/es/config/

[root@localhost config]# ll

total 36

-rw-rw----. 1 es es 2831 Sep 23 2020 elasticsearch.yml

-rw-rw----. 1 es es 2301 Sep 23 2020 jvm.options

drwxr-x---. 2 es es 6 Sep 23 2020 jvm.options.d

-rw-rw----. 1 es es 17671 Sep 23 2020 log4j2.properties

-rw-rw----. 1 es es 473 Sep 23 2020 role_mapping.yml

-rw-rw----. 1 es es 197 Sep 23 2020 roles.yml

-rw-rw----. 1 es es 0 Sep 23 2020 users

-rw-rw----. 1 es es 0 Sep 23 2020 users_roles

Edit elasticsearch.yml

node01

vim elasticsearch.yml

cluster.name: my-es-cluster

node.name: node-1

path.data: /data/esdata # ES data directory

path.logs: /var/log/elasticsearch # ES log directory

node.roles: [ master, data, ingest ]

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["192.168.56.112", "192.168.56.113", "192.168.56.114"]

cluster.initial_master_nodes: ["node-1", "node-2", "node-3"]

node02

vim elasticsearch.yml

cluster.name: my-es-cluster

node.name: node-2

path.data: /data/esdata # ES data directory

path.logs: /var/log/elasticsearch # ES log directory

node.roles: [ master, data, ingest ]

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["192.168.56.112", "192.168.56.113", "192.168.56.114"]

cluster.initial_master_nodes: ["node-1", "node-2", "node-3"]

node03

vim elasticsearch.yml

cluster.name: my-es-cluster

node.name: node-3

path.data: /data/esdata # ES data directory

path.logs: /var/log/elasticsearch # ES log directory

node.roles: [ master, data, ingest ]

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["192.168.56.112", "192.168.56.113", "192.168.56.114"]

cluster.initial_master_nodes: ["node-1", "node-2", "node-3"]

Edit jvm.options

vim jvm.options

## JVM configuration

################################################################

## IMPORTANT: JVM heap size

################################################################

##

## You should always set the min and max JVM heap

## size to the same value. For example, to set

## the heap to 4 GB, set:

##

## -Xms4g

## -Xmx4g

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/current/heap-size.html

## for more information

##

################################################################

# Xms represents the initial size of total heap space

# Xmx represents the maximum size of total heap space

-Xms4g

-Xmx4g

Create the data and log directories

# Create the data directory

mkdir -p /data/esdata/

# Create the log directory

mkdir /var/log/elasticsearch

Change owner and group

chown -R es:es /data/esdata/

chown -R es:es /var/log/elasticsearch

Set kernel parameters
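Elasticsearch's bootstrap checks require vm.max_map_count to be at least 262144 when the node binds to a non-loopback address, as it does here (network.host: 0.0.0.0). A quick check of the current value, as a sketch (`max_map_count_ok` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Succeed if vm.max_map_count meets Elasticsearch's minimum of 262144.
# Reads the live value from /proc unless a value is passed as $1 (handy for testing).
max_map_count_ok() {
  local required=262144
  local current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  [ "$current" -ge "$required" ]
}
```

For example: `max_map_count_ok || echo "vm.max_map_count is too low"`. The kernel default is usually 65530.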

echo "vm.max_map_count=262144" >> /etc/sysctl.conf

sysctl -p

Set the file descriptor limit
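`echo ... >>` adds a duplicate line every time these steps are re-run. A small idempotent alternative, as a sketch (`append_once` is a hypothetical name), appends a line only if the exact line is not already in the file; it works for the sysctl.conf entry above as well:

```shell
#!/usr/bin/env bash
# Append a line to a file unless the exact same line is already present.
# A missing file is created.
append_once() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example: `append_once /etc/security/limits.conf "es soft nofile 65535"` and `append_once /etc/security/limits.conf "es hard nofile 65535"`.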

echo "es soft nofile 65535" >> /etc/security/limits.conf

echo "es hard nofile 65535" >> /etc/security/limits.conf

Start in the foreground first to check for errors

sudo -u es /usr/local/es/bin/elasticsearch

Start in the background

sudo -u es /usr/local/es/bin/elasticsearch -d

Verify the service

Check the listening ports

[root@localhost logs]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:9200 [::]:*

LISTEN 0 128 [::]:9300 [::]:*

LISTEN 0 128 [::]:22 [::]:*

Check via the HTTP endpoint (the sample output below is the one from the single-node setup; on a cluster node, name and cluster_name show the values from elasticsearch.yml, such as node-1 and my-es-cluster)

[root@localhost logs]# curl localhost:9200

{

"name" : "node", # node name

"cluster_name" : "elasticsearch", # cluster name

"cluster_uuid" : "Kc7g6qybRNOi5yXVAEp04g", # cluster UUID

"version" : {

"number" : "7.9.2", # ES version

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "d34da0ea4a966c4e49417f2da2f244e3e97b4e6e",

"build_date" : "2020-09-23T00:45:33.626720Z",

"build_snapshot" : false,

"lucene_version" : "8.6.2", # Lucene version; ES is built on Lucene for search

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search" # the project's tagline

}

List all nodes in the cluster
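In the `_cat/nodes?v` output, the `master` column marks the elected master with `*` and the other nodes with `-`. To pick it out in a script, a one-line awk filter is enough (a sketch; `elected_master` is a hypothetical name):

```shell
#!/usr/bin/env bash
# Read `_cat/nodes?v` output on stdin and print the elected master's name.
# The master column is second to last and the name column is last.
elected_master() {
  awk 'NR > 1 && $(NF-1) == "*" { print $NF }'
}
```

For example, `curl -s "http://192.168.56.113:9200/_cat/nodes?v" | elected_master` prints node-3 for the sample output below.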

curl -XGET "http://<any-node-IP>:9200/_cat/nodes?v"

[root@localhost elasticsearch]# curl -XGET "http://192.168.56.113:9200/_cat/nodes?v"

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

192.168.56.112 8 78 2 0.48 0.35 0.28 dim - node-1

192.168.56.114 16 79 3 0.09 0.45 0.38 dim * node-3

192.168.56.113 14 79 2 0.43 0.45 0.32 dim - node-2

Check the node count (reported by the cluster health API)
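The `_cluster/health` response includes `number_of_nodes`. For scripts, for example to wait until all three nodes have joined, the value can be extracted without jq. A sketch; `number_of_nodes` is a hypothetical helper that assumes the field appears once, as it does in this response:

```shell
#!/usr/bin/env bash
# Read _cluster/health JSON (compact or ?pretty) on stdin and print number_of_nodes.
number_of_nodes() {
  grep -o '"number_of_nodes"[[:space:]]*:[[:space:]]*[0-9]*' | grep -o '[0-9]*$'
}
```

For example: `[ "$(curl -s "http://192.168.56.112:9200/_cluster/health" | number_of_nodes)" -eq 3 ] && echo "all 3 nodes joined"`.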

curl -XGET "http://<any-node-IP>:9200/_cluster/health?pretty"

Show detailed information for every node (roles, IP, ports, etc.)

curl -XGET "http://<any-node-IP>:9200/_nodes?pretty"

Example Logstash output configuration for the cluster:

output {

elasticsearch {

hosts => ["http://192.168.56.112:9200", "http://192.168.56.113:9200", "http://192.168.56.114:9200"]

index => "your-index-name-%{+YYYY.MM.dd}"

}

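}

Example Kibana configuration for the cluster

# IP address and port Kibana listens on

server.host: "0.0.0.0"

server.port: 5601

# Elasticsearch cluster addresses (listing several nodes is for redundancy, not load balancing)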

elasticsearch.hosts:

- "http://192.168.56.112:9200"

- "http://192.168.56.113:9200"

- "http://192.168.56.114:9200"

# Credentials for connecting to Elasticsearch

# elasticsearch.username: "kibana_system"

# elasticsearch.password: "your_password"

# If HTTPS is enabled (optional)

# elasticsearch.hosts: ["https://192.168.56.112:9200"]

# elasticsearch.ssl.verificationMode: "none"

# Kibana's own service name

server.name: "kibana-node1"

# Index Kibana stores its own data in (optional)

kibana.index: ".kibana"

# If TLS/SSL is used, the following can be added as well:

# elasticsearch.ssl.certificateAuthorities: [ "/path/to/ca.crt" ]

EFK

Create directories for the manifests

mkdir -p /home/efk/{01.es,02.kibana,03.fluentd}

Create the namespace

vim /home/efk/00.efk_namespace.yaml

kind: Namespace

apiVersion: v1

metadata:

name: logging-efk

labels:

app: efk

environment: dev

version: v1

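component: namespace

ES

Create the PVs (PersistentVolumes are cluster-scoped: these names and NFS paths are the same as in the ELK section, so rename them if both stacks run in the same cluster)

vim /home/efk/01.es/00.elasticsearch-pv.yaml

apiVersion: v1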

kind: PersistentVolume

metadata:

name: data-elasticsearch-0

labels:

app: elasticsearch

environment: dev

version: v1

component: pv

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain # keep the PV (and its data) when the PVC is deleted

storageClassName: efk-sc # storage class name used to match PVs with PVCs

nfs:

path: "/home/elk00"

server: 192.168.56.42

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: data-elasticsearch-1

labels:

app: elasticsearch

environment: dev

version: v1

component: pv

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain # keep the PV (and its data) when the PVC is deleted

storageClassName: efk-sc # storage class name used to match PVs with PVCs

nfs:

path: "/home/elk01"

server: 192.168.56.42

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: data-elasticsearch-2

labels:

app: elasticsearch

environment: dev

version: v1

component: pv

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

persistentVolumeReclaimPolicy: Retain # keep the PV (and its data) when the PVC is deleted

storageClassName: efk-sc # storage class name used to match PVs with PVCs

nfs:

path: "/home/elk02"

server: 192.168.56.42

Create the Service

vim /home/efk/01.es/01.elasticsearch-svc.yaml

kind: Service

apiVersion: v1

metadata:

name: elasticsearch

namespace: logging-efk

labels:

app: elasticsearch

environment: dev

version: v1

component: svc

spec:

selector:

app: elasticsearch

clusterIP: None

ports:

- port: 9200

name: rest

- port: 9300

name: inter-node

Create the StatefulSet

vim /home/efk/01.es/02.elasticsearch-statefulset.yaml

kind: StatefulSet

apiVersion: apps/v1 # Kubernetes API version

metadata:

name: elasticsearch # resource name

namespace: logging-efk # deploy into the logging-efk namespace

labels:

app: elasticsearch

environment: dev

version: v1

component: statefulset

spec:

serviceName: elasticsearch # 关联的 Headless Service 名称,用于网络标识

replicas: 3 # 集群节点数量,保持奇数以实现高可用

selector: # 标签选择器,匹配管理的 Pod

matchLabels:

app: elasticsearch

template:

metadata:

labels:

app: elasticsearch # Pod 标签,需与 selector 匹配

spec:

# 初始化容器配置

initContainers:

- name: increase-vm-max-map

image: zzwen.cn:8002/bosai/busybox:latest

imagePullPolicy: "IfNotPresent"

command: ["sysctl", "-w", "vm.max_map_count=262144"] # 调整系统参数,ES 需要较大虚拟内存

securityContext:

privileged: true # 需要特权模式执行系统级配置

- name: increase-fd-ulimit

image: zzwen.cn:8002/bosai/busybox:latest

imagePullPolicy: "IfNotPresent"

securityContext:

privileged: true # 需要特权模式修改文件权限

command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"] # 设置数据目录权限

volumeMounts:

- name: data

mountPath: /usr/share/elasticsearch/data # 挂载持久化存储

- name: install-ik

image: zzwen.cn:8002/bosai/elasticsearch:7.17.3

command:

- sh

- -c

- |

elasticsearch-plugin install --batch https://get.infini.cloud/elasticsearch/analysis-ik/7.17.3

volumeMounts:

- name: plugins

mountPath: /usr/share/elasticsearch/plugins

containers:

- name: elasticsearch

image: zzwen.cn:8002/bosai/elasticsearch:7.17.3

imagePullPolicy: "IfNotPresent" # 镜像拉取策略

# 容器端口配置

ports:

- name: rest # REST API 端口

containerPort: 9200

- name: inter # 节点间通信端口

containerPort: 9300

resources:

limits:

cpu: 1000m # CPU 资源限制(1核)

memory: 1Gi # 内存资源限制

requests:

cpu: 1000m # CPU 资源请求

memory: 1Gi # 内存资源请求s

volumeMounts:

- mountPath: /usr/share/elasticsearch/data # 数据存储路径

name: data

- name: plugins

mountPath: /usr/share/elasticsearch/plugins

env:

- name: cluster.name

value: k8s-logs # 集群名称,需所有节点一致

- name: node.name

valueFrom:

fieldRef:

fieldPath: metadata.name # 使用 Pod 名称作为节点名称

- name: cluster.initial_master_nodes

value: "elasticsearch-0,elasticsearch-1,elasticsearch-2" # 初始主节点列表(StatefulSet 命名规则)

- name: discovery.zen.minimum_master_nodes

value: "2" # 防止脑裂的最小主节点数,应设为 (节点数/2)+1

- name: discovery.seed_hosts

value: "elasticsearch" # 通过 Kubernetes Service 发现节点

- name: ES_JAVA_OPTS

value: "-Xms512m -Xmx512m" # JVM 堆内存设置

- name: network.host

value: "0.0.0.0"

volumes:

- name: plugins

emptyDir: {}

# 持久化存储配置模板

volumeClaimTemplates:

- metadata:

name: data

labels:

app: elasticsearch

environment: dev

version: v1

component: statefulset

spec:

accessModes:

- ReadWriteOnce # 单节点读写模式

storageClassName: efk-sc # 存储类名称

resources:

requests:

storage: 50Gi # 每个 Pod 的存储空间Kibana

Create the ConfigMap
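The `kibana.yml` in this ConfigMap leaves the Elasticsearch address as `${ELASTICSEARCH_HOSTS}`. Kibana resolves such references from its environment when it loads the file, and the Deployment later in this section sets the variable.

A simplified Python model of the substitution, for illustration only. It handles just the plain `${VAR}` form and raises on an unset variable; both are choices of this sketch, not documented Kibana behavior.

```python
import re
from typing import Mapping

# Matches ${NAME}; only the plain form is handled in this sketch.
_ENV_REF = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def substitute_env(text: str, env: Mapping[str, str]) -> str:
    """Replace every ${NAME} in text with env[NAME]; raise KeyError if NAME is unset."""
    def replace(match: re.Match) -> str:
        name = match.group(1)
        if name not in env:
            raise KeyError(f"environment variable {name} is not set")
        return env[name]

    return _ENV_REF.sub(replace, text)


if __name__ == "__main__":
    env = {"ELASTICSEARCH_HOSTS": "http://elasticsearch.logging-efk.svc.cluster.local:9200"}
    print(substitute_env("hosts: ${ELASTICSEARCH_HOSTS}", env))
```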

vim /home/efk/02.kibana/00.kibana-configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: kibana-config
  namespace: logging-efk
  labels:
    app: kibana
    environment: dev
    version: v1
    component: configmap
data:
  kibana.yml: |
    server.name: kibana
    server.host: "0.0.0.0"
    i18n.locale: zh-CN               # set the default UI language to Chinese
    elasticsearch:
      hosts: ${ELASTICSEARCH_HOSTS}  # Elasticsearch cluster address, taken from the environment

Create the Service

vim /home/efk/02.kibana/01.kibana-service.yaml
kind: Service
apiVersion: v1
metadata:
  name: kibana
  namespace: logging-efk
  labels:
    app: kibana
    environment: dev
    version: v1
    component: service
spec:
  ports:
  - port: 5601
  type: NodePort
  selector:
    app: kibana

Create the Deployment

vim /home/efk/02.kibana/02.kibana-deployment.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: kibana
  namespace: logging-efk
  labels:
    app: kibana
    environment: dev
    version: v1
    component: deployment
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: zzwen.cn:8002/bosai/kibana:7.17.3
        imagePullPolicy: "IfNotPresent"
        resources:
          limits:
            cpu: 1000m
            memory: 2Gi
          requests:
            cpu: 1000m
            memory: 2Gi
        env:
        - name: ELASTICSEARCH_URL
          value: http://elasticsearch.logging-efk.svc.cluster.local:9200    # ES headless Service; Kibana 6.x variable name, 7.x reads ELASTICSEARCH_HOSTS
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch.logging-efk.svc.cluster.local:9200    # ES headless Service; substituted into kibana.yml
        ports:
        - containerPort: 5601
          name: kibana
        volumeMounts:
        - name: config
          mountPath: /usr/share/kibana/config/kibana.yml  # where the Kibana config file is mounted
          readOnly: true
          subPath: kibana.yml
      volumes:
      - name: config             # must match the volumeMounts name above
        configMap:
          name: kibana-config    # name of the ConfigMap

fluentd

Create the ConfigMap
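The `<parse>` block in `containers.input.conf` tries JSON first, the format written by Docker's `json-file` driver. It then falls back to a regex for the CRI format used by containerd and CRI-O, where each line is `<time> <stream> <flag> <message>` (flag `F` for a full line, `P` for a partial one).

A Python sketch of that fallback order. The regex is the one from the config, with Ruby's `(?<name>...)` groups rewritten as Python's `(?P<name>...)`. The sample lines are made up.

```python
import json
import re
from typing import Optional

# CRI regex from the second <pattern>, converted to Python named-group syntax.
CRI_LINE = re.compile(r"^(?P<time>.+) (?P<stream>stdout|stderr) [^ ]* (?P<log>.*)$")


def parse_container_line(line: str) -> Optional[dict]:
    """Try JSON (Docker json-file) first, then the CRI regex; None if neither matches."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        record = None
    if isinstance(record, dict):
        return record
    match = CRI_LINE.match(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    samples = [
        '{"log":"0: Thu May 16 09:20:00 CST 2024\\n","stream":"stdout","time":"2024-05-16T01:20:00.123456789Z"}',
        "2024-05-16T09:20:00.123456789+08:00 stdout F 0: Thu May 16 09:20:00 CST 2024",
    ]
    for sample in samples:
        print(parse_container_line(sample))
```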

vim /home/efk/03.fluentd/00.fluentd-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
  namespace: logging-efk
  labels:
    app: fluentd
    version: v1
    component: configmap
    environment: dev
data:
  # Fluentd system-level settings, mainly the buffer root directory
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  # container log input: read container logs from the node paths mounted into the Pod
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail                      # read files with the tail plugin
      # every log file under /var/log/containers (container stdout/stderr logs)
      path /var/log/containers/*.log
      # record the read position so a restart does not re-read logs
      pos_file /var/log/es-containers.log.pos
      # tag used for later matching and routing
      tag raw.kubernetes.*
      read_from_head true             # read from the start of the file (on first start)
      # log format parsing
      <parse>
        @type multi_format            # try several formats in turn (JSON, then regex)
        <pattern>
          format json
          time_key time               # field holding the timestamp
          time_format %Y-%m-%dT%H:%M:%S.%NZ  # RFC 3339 format
        </pattern>
        <pattern>
          # CRI (containerd/CRI-O) format: time, stream (stdout/stderr), flag, content
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>

    # exception detection: merge multi-line stack traces into a single record
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw           # strip the "raw" prefix so the filters below match kubernetes.**
      message log                     # field containing the message
      stream stream                   # field containing stdout/stderr
      multiline_flush_interval 5      # seconds to wait for further lines of a multi-line entry
      max_bytes 500000                # maximum size of one record
      max_lines 1000                  # maximum lines in one record
    </match>

    # join log entries that were split across several lines
    <filter **>
      @id filter_concat
      @type concat
      key message
      multiline_end_regexp /\n$/      # a line ending in a newline completes the entry
      separator ""                    # join without a separator
    </filter>

    # add Kubernetes metadata (namespace, Pod name, labels, ...)
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>

    # parse the `log` field as JSON so structured logs become structured fields
    <filter kubernetes.**>
      @id filter_parser
      @type parser
      key_name log                    # field to parse
      reserve_data true               # keep the original fields
      remove_key_name_field true      # drop the original field after a successful parse
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none                 # fallback for unstructured logs
        </pattern>
      </parse>
    </filter>

    # drop fields that are not needed, to reduce storage
    <filter kubernetes.**>
      @type record_transformer
      # keep remove_keys on one line: Fluentd ends an unquoted value at the line break
      remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
    </filter>

    # keep only logs from Pods labeled logging=true
    <filter kubernetes.**>
      @id filter_log
      @type grep
      <regexp>
        key $.kubernetes.labels.logging
        pattern ^true$
      </regexp>
    </filter>
  # TCP input: other services can send logs over the forward protocol
  forward.input.conf: |-
    <source>
      @id forward
      @type forward
    </source>
  # output: send logs to Elasticsearch
  output.conf: |-
    <match **>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      include_tag_key true            # keep the tag in the ES document
      host elasticsearch              # Elasticsearch Service name (same namespace)
      port 9200
      logstash_format true            # write logstash-style daily indices
      logstash_prefix k8s             # index names: k8s-YYYY.MM.DD
      request_timeout 30s
      # buffer settings for throughput and reliability
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        flush_interval 5s
        flush_thread_count 2
        retry_type exponential_backoff
        retry_forever true
        retry_max_interval 30s
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block         # block input when the queue is full
      </buffer>
    </match>

Create the DaemonSet
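Fluentd tails `/var/log/containers/*.log`, which is why the DaemonSet mounts the node's `/var/log`. With Docker, those files are symlinks that end up in `/var/lib/docker/containers`, hence the second mount.

The kubelet names each file `<pod>_<namespace>_<container>-<container id>.log`. Because `tag raw.kubernetes.*` puts that path into the tag, the `kubernetes_metadata` filter can read the Pod, namespace and container from it. It then queries the API server, which is what the ClusterRole's `get`/`list`/`watch` on `pods` and `namespaces` is for.

A Python sketch of the name parsing. The regex is a simplified version of the plugin's default, and the sample container ID is made up.

```python
import os
import re
from typing import Dict, Optional

# Simplified from the kubernetes_metadata filter's default pattern:
# <pod>_<namespace>_<container>-<64-char container id>.log
CONTAINER_LOG_NAME = re.compile(
    r"^(?P<pod_name>[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*)"
    r"_(?P<namespace>[^_]+)"
    r"_(?P<container_name>.+)"
    r"-(?P<container_id>[a-z0-9]{64})\.log$"
)


def parse_container_log_name(path: str) -> Optional[Dict[str, str]]:
    """Split a /var/log/containers file name into pod, namespace, container and container ID."""
    match = CONTAINER_LOG_NAME.match(os.path.basename(path))
    return match.groupdict() if match else None


if __name__ == "__main__":
    fake_id = "0123456789abcdef" * 4  # made-up 64-character container ID
    print(parse_container_log_name(f"/var/log/containers/counter_default_count-{fake_id}.log"))
```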

vim /home/efk/03.fluentd/01.fluentd-daemonset.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: logging-efk
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "namespaces"
  - "pods"
  verbs:
  - "get"
  - "watch"
  - "list"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: fluentd-es
  namespace: logging-efk
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: DaemonSet                  # a DaemonSet runs one Fluentd instance on every node
metadata:
  name: fluentd-es
  namespace: logging-efk         # must match the namespace of the ServiceAccount and ConfigMap
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile  # the addon manager keeps the resource in its declared state
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-es        # matches template.labels; identifies the DaemonSet's Pods
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        # (deprecated) marked critical Pods on early Kubernetes versions so they were less likely to be evicted
    spec:
      serviceAccountName: fluentd-es  # ServiceAccount with the RBAC permissions above
      containers:
      - name: fluentd-es
        image: zzwen.cn:8002/bosai/fluentd:v3.4.0
        # Fluentd image from a private registry; a custom image can bundle the required plugins
        env:
        - name: FLUENTD_ARGS
          value: --no-supervisor -q
          # Fluentd startup arguments:
          # --no-supervisor  run without Fluentd's supervisor process
          # -q               quiet mode, less log output
        resources:
          limits:
            memory: 500Mi        # memory limit
          requests:
            cpu: 100m            # CPU request
            memory: 200Mi        # memory request
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          # host /var/log: system and container logs
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
          # container runtime log directory, read-only so Fluentd cannot modify container logs
        - name: config-volume
          mountPath: /etc/fluent/config.d
          # Fluentd configuration, mounted into Fluentd's config directory
      # tolerations let Fluentd run on tainted nodes, e.g. master nodes
      tolerations:
      - operator: Exists
      terminationGracePeriodSeconds: 30
      # 30 seconds before the container is killed, so buffered logs can be flushed to the destination
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
        # host /var/log; Fluentd reads the container log links (*.log) from here
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
        # container runtime log directory (files are named by container ID)
      - name: config-volume
        configMap:
          name: fluentd-config
        # mounts the fluentd-config ConfigMap defined earlier into the Fluentd config directory

Create two test Pods
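The last filter in `containers.input.conf` is a `grep` on `$.kubernetes.labels.logging` with pattern `^true$`, so only Pods labeled `logging: "true"` reach Elasticsearch. Both test Pods in this step carry that label, quoted because label values must be strings.

A Python sketch of that decision on records shaped like the `kubernetes_metadata` output. The records and helper names are hypothetical.

```python
import re
from typing import Any, Dict

# Same pattern as the <regexp> in the grep filter.
LOGGING_PATTERN = re.compile(r"^true$")


def dig(record: Dict[str, Any], *keys: str) -> Any:
    """Walk nested keys like the path $.kubernetes.labels.logging; None if any level is missing."""
    value: Any = record
    for key in keys:
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def keep(record: Dict[str, Any]) -> bool:
    """True if the record would pass the grep filter; records without the label are dropped."""
    value = dig(record, "kubernetes", "labels", "logging")
    return value is not None and LOGGING_PATTERN.search(str(value)) is not None


if __name__ == "__main__":
    print(keep({"log": "0: Thu May 16", "kubernetes": {"labels": {"logging": "true"}}}))
    print(keep({"log": "no label", "kubernetes": {"labels": {"app": "test"}}}))
```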

vim pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: counter
  labels:
    logging: "true"   # required: the Fluentd config only collects logs from Pods with this label
spec:
  containers:
  - name: count
    image: busybox
    args:
    - /bin/sh
    - -c
    - 'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done'
    resources:
      limits:
        memory: 200Mi
        cpu: 500m
      requests:
        cpu: 100m
        memory: 100Mi

vim pod-nginx.yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-test
  namespace: default
  labels:
    app: test
    logging: "true"
spec:
  containers:
  - name: nginx
    image: zzwen.cn:8002/bosai/nginx:1.22
    resources:
      limits:
        cpu: 200m
        memory: 500Mi
      requests:
        cpu: 100m
        memory: 200Mi
    ports:
    - containerPort: 80
      name: http
    volumeMounts:
    - name: localtime
      mountPath: /etc/localtime
  volumes:
  - name: localtime
    hostPath:
      path: /usr/share/zoneinfo/Asia/Shanghai
  restartPolicy: Always
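Once the Pods are running, their logs should land in daily indices. With `logstash_format true` and `logstash_prefix k8s`, fluent-plugin-elasticsearch writes to `k8s-YYYY.MM.DD`, so a `k8s-*` index pattern in Kibana covers them.

The plugin's `utc_index` option defaults to `true`, so the date comes from the event time in UTC. On a UTC+8 node, the index therefore rolls over at 08:00 local time.

A Python sketch of the naming, assuming the plugin's default `-` separator and `%Y.%m.%d` date format. The function is illustrative only.

```python
from datetime import datetime, timedelta, timezone


def logstash_index(event_time: datetime, prefix: str = "k8s", utc_index: bool = True,
                   dateformat: str = "%Y.%m.%d", separator: str = "-") -> str:
    """Daily index name <prefix><separator><date>, as written with logstash_format true."""
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    if utc_index:
        event_time = event_time.astimezone(timezone.utc)
    # With utc_index false the plugin uses the Fluentd host's local time; this sketch
    # keeps the timestamp's own offset as a stand-in for that zone.
    return f"{prefix}{separator}{event_time.strftime(dateformat)}"


if __name__ == "__main__":
    shanghai = timezone(timedelta(hours=8))
    print(logstash_index(datetime(2024, 5, 16, 7, 30, tzinfo=shanghai)))
```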